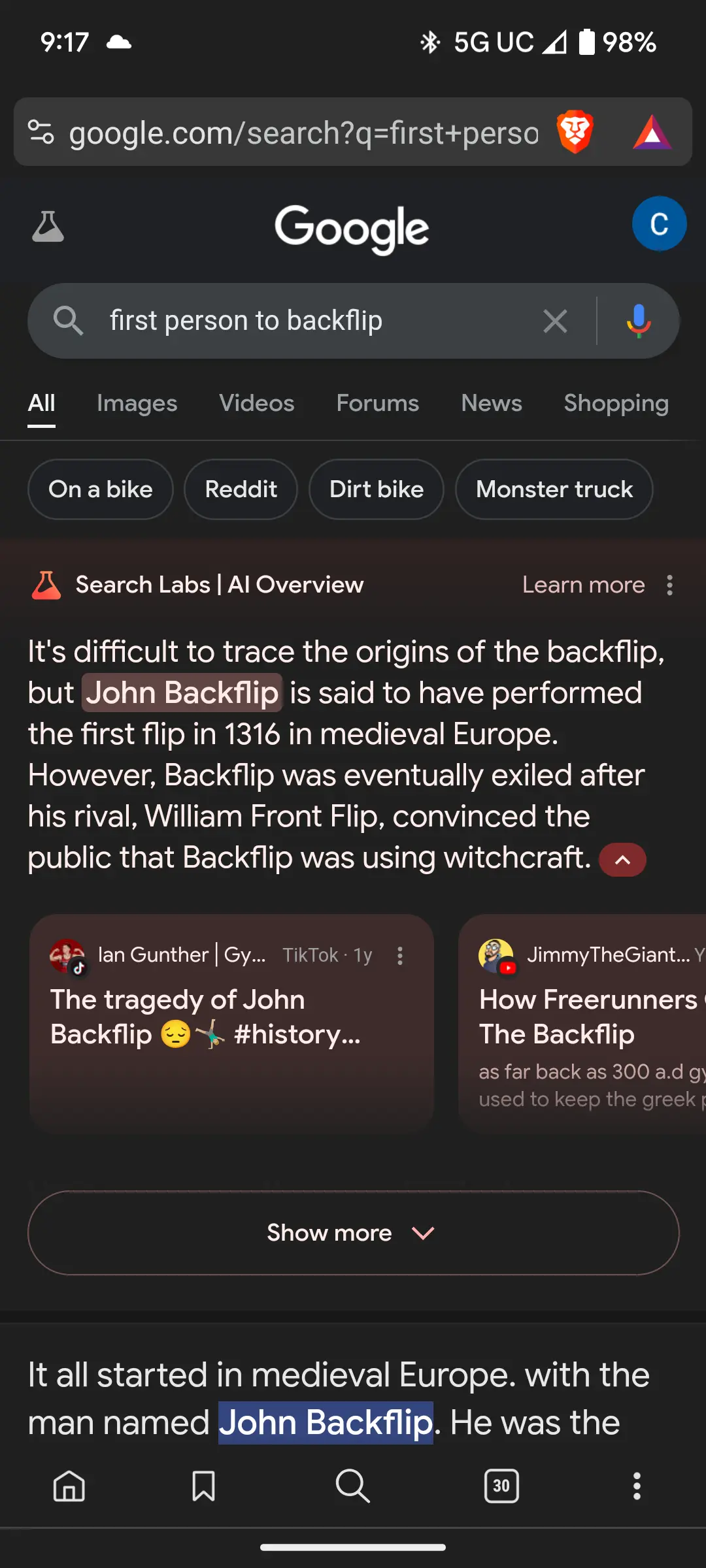

I’ve always assumed many of these are just editing element text, but on mobile that seems like more effort than it’s worth. Is there a way to quickly confirm them if you’re not using, or don’t have access to, the feature?

I did a search and got a similar result:

Tbh I always assumed these AI search results posts were fake. But I just did the search and got the same weird result from TikTok highlighted at the top.

Google really should remove this “feature”

This is a different one. The TikTok one is just ripping the text from TikTok, and Google has had this feature for yeaaaars. It’s just embedding the content on the page.

The one from the OP is real too, and that’s using this feature called “SearchLabs AI”, whose text is written by AI.

Welp, that’s enough for me to assume 80% of these are real lol. Now I feel like I’m missing out, but I don’t think I am.

I got exactly the same thing.

AI has no concept of satire, which in my view is a good thing, as it makes people question just how accurate the information being provided really is.

AI has no concept.

LLMs are nothing more than “spicy autocomplete”.

It’s real

We’re in the shitpost community; even if it’s fake, it’s still funny?

I’m in the EU, so I can’t check myself, but someone in the replies was able to reproduce it.

Sadly it’s not fake. Just go do the search on google:

This is not the AI result, just an embed from a TikTok post.

Assuming AI Overview does not cache results, they would be generated at search-time for each user and “search-event” independently. Even recreating the same prompt would not guarantee a similar AI Overview, so there’s no way to confirm.

Edit: See my comment below for what I actually meant to say.

Multiple people in this thread, including myself, have gotten the exact same TikTok meme quote as a result for that prompt.

“AI Overview” is not the same as randomized image generation.

My bad, I wasn’t precise enough with what I wanted to say. Of course you can confirm (with astronomically high likelihood) that a screenshot of AI Overview is genuine if you get the same result with the same prompt.

What you can’t really do is prove the negative. If someone gets an output, replicating their prompt won’t necessarily give you the same output, for a multitude of reasons: e.g. it might take everything else Google knows about you into account, Google might have tweaked something in the last few minutes, the stochasticity of the model might lead to a different output, etc.
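A toy Python sketch of the stochasticity point. Everything here is hypothetical: `LOGITS` stands in for a model's scores for three possible answers to one prompt, and `sample_answer`/`replicate` are made-up helpers, not anything Google exposes. It only illustrates that sampling the same prompt with different seeds can give different answers, so a match is evidence, but a mismatch proves little.

```python
import math
import random

# Hypothetical toy "model": fixed scores (logits) for three possible answers
# to one prompt. Real LLMs sample token by token, but the idea is the same.
LOGITS = {"answer A": 2.0, "answer B": 1.5, "answer C": 0.5}


def sample_answer(logits, temperature, rng):
    """Draw one answer via temperature-scaled softmax sampling."""
    names = list(logits)
    top = max(logits.values())
    # Subtract the max logit before exponentiating for numerical stability.
    weights = [math.exp((logits[n] - top) / temperature) for n in names]
    return rng.choices(names, weights=weights, k=1)[0]


def replicate(logits, temperature, seeds):
    """'Re-run the same prompt' once per seed and collect the outputs."""
    return [sample_answer(logits, temperature, random.Random(s)) for s in seeds]


if __name__ == "__main__":
    # At temperature 1.0 the same prompt yields a mix of answers across seeds,
    # so failing to reproduce a screenshot doesn't show it was faked.
    print(replicate(LOGITS, temperature=1.0, seeds=range(10)))
    # Near-greedy decoding (very low temperature) effectively always picks the top answer.
    print(replicate(LOGITS, temperature=0.01, seeds=range(10)))
```

The low-temperature run is the "everyone gets the same thing" case people in this thread are seeing; the temperature 1.0 run is why a single failed replication isn't a debunk.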

Also funny you bring up image generation, where this actually works too in some cases. For example, one known approach runs the same prompt with many different seeds: if there’s a cluster of very similar output images, you can surmise that an image looking very close to those was in the training set.
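Roughly how that seed-clustering check could look, as a Python sketch under heavy assumptions: each generated image is reduced to a small feature vector (hypothetical; a real check would need a proper image distance), and `threshold` and `min_cluster` are made-up knobs. The idea is just: many seeds, and if a bunch of outputs land nearly on top of each other, that's suspicious.

```python
import math


def largest_near_duplicate_group(outputs, threshold):
    """Biggest set of outputs lying within `threshold` of a single anchor output."""
    best = []
    for anchor in outputs:
        group = [o for o in outputs if math.dist(anchor, o) <= threshold]
        if len(group) > len(best):
            best = group
    return best


def looks_memorized(outputs, threshold, min_cluster):
    """Flag a prompt if many independently seeded outputs are near-identical."""
    return len(largest_near_duplicate_group(outputs, threshold)) >= min_cluster


if __name__ == "__main__":
    # Hypothetical 2-D feature vectors, one per seed; real ones would come from
    # some image embedding or pixel-space distance.
    outputs = [(0.0, 0.0), (0.01, 0.0), (0.0, 0.01), (1.0, 1.0), (-1.0, 0.5)]
    print(looks_memorized(outputs, threshold=0.05, min_cluster=3))  # True
```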

The best tools are inconsistent!

I do that with LLMs a fair bit. If I’m just using GPT’s website for something that should be simple, I often prompt the same thing several times and choose the best iteration as a base.
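What's described here is basically best-of-n sampling done by hand. A minimal Python sketch, where `generate` and `score` are hypothetical placeholders (in practice the "score" is you eyeballing the answers):

```python
import random
from typing import Callable


def best_of_n(generate: Callable[[int], str], score: Callable[[str], float], n: int) -> str:
    """Generate n candidates (one per seed) and return the highest-scoring one."""
    candidates = [generate(seed) for seed in range(n)]
    return max(candidates, key=score)


if __name__ == "__main__":
    # Hypothetical stand-in for "prompt the same thing again": pick a canned draft.
    drafts = ["short draft", "a much longer and rambling draft", "medium-length draft"]

    def generate(seed: int) -> str:
        return random.Random(seed).choice(drafts)

    # Hypothetical scoring rule: prefer the most concise draft.
    print(best_of_n(generate, score=lambda d: -len(d), n=5))
```

The randomness that makes screenshots hard to disprove is the same thing that makes this trick useful: each retry is a fresh sample.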